Here is a recent letter concerning Regents Exams and a teacher's SLO score (forgive the formatting; it is copied from a PDF).

May 24, 2013

Dr. John B. King, Jr.

New York State Commissioner of Education

New York State Education Department

89 Washington Avenue, Albany, NY 12234

Dear Commissioner King:

I am writing in response to Ken Slentz’s letter of May 22, 2013, in which he attempts to clarify some of the questions and concerns still remaining around the scoring of SLOs. While the memo may have sought to clarify, I assure you that the field questions SED’s decision to make this change, particularly this late in the school year. The SED memo contradicts previous guidance to practitioners on this issue. This latest change in direction is yet another example of the Department’s failure to communicate with those most directly affected by its changes. The lack of professional regard for practitioners is apparent, further eroding the tenuous relationship SED has with those in the field.

As to NYSUT’s concerns: The understanding in the field since September, based on SED guidance, was that if a student does not sit for a Regents exam, there is no second set of data from which to calculate a growth score for the student, and therefore the student would not be counted in a teacher’s SLO. SED has now made a distinction between being absent on the day of the assessment and not qualifying to sit for the exam. This distinction is contrary to how these students are treated in the New York State School Report Card Student Performance Science Regents Data. This new determination will affect science teachers exclusively.

SED’s letter states that students who do not qualify to sit for a Regents exam because they did not meet the minimum number of required lab hours would still count in a teacher’s SLO HEDI results. In this case, the student would receive a ‘0’, and that ‘0’ would be factored into the teacher’s final summative SLO rating. This rule puts Regents-level science teachers across the state at a huge disadvantage because they are the only group of teachers with a mandatory lab requirement. Only science Regents courses have a qualifying requirement that is out of the teacher’s control.
A student could be failing other courses and still take the Regents and possibly score well. The science students who don’t qualify because of the lab requirement might be passing the course, and if they were permitted to take the Regents exam, they might do well.

Assigning a zero to something that was not completed unfairly skews the statistical results that are so important to accurately measuring the variable at hand (in this case, teacher effectiveness). A student who fails to meet the qualifying lab requirement is disqualified from demonstrating his/her knowledge of the subject content, and that disqualification now requires the teacher to receive a ‘0’ for that student’s score. The ‘0’ says nothing about the teacher’s effectiveness because there is no assessment to measure student content knowledge; it is based solely on whether the student met the lab requirement.

SED guidance on SLOs has been lacking in detail, and much of the responsibility for the SLO process has been passed to districts. It is no wonder that this “rule” has the field up in arms, as it conflicts with SED’s limited guidance on SLOs (revised March 2012), which states that SLOs must measure two points in time for the same student. More recently, the May 9 webinar on the EngageNY web site (http://www.engageny.org/resource/slo-results-analysis-webinar) states in at least three places that a student must have two data points, a pre-assessment and a post-assessment, in order to be factored into a teacher’s SLO. At the 10:45-10:53 minute mark, the webinar states: "Only where students do not have two scores will they be uncounted for purposes of calculating (SLO) outcomes." If a student does not take a post-assessment, then she/he will not have these two data points. Throughout the guidance, no distinction is made between the reasons for not having two scores, and there is no reference to not qualifying to sit for the assessment.

To further support the understanding in the field, at the May NTI training, a BOCES NTI team determined that they all had similar interpretations of the issue and shared the following on the Science listserv: “Our best thinking is that the language states that calculating the SLO requires data from two points in time. If students cannot sit for the exam, they are not calculated in the SLO.”

SED goes on to say, in its letter to NYSUT, that it is the responsibility of the teacher to ensure that all students meet the lab time requirements so that they are able to sit for the Regents exam. This statement shows a gross lack of understanding of the fact that the teacher of the Regents course is often not the lab teacher. The letter further states that “The Department recommends that districts/BOCES create processes that ensure students have opportunities to make up lab requirements.” Again, SED seems to be unaware of how districts schedule these labs and the resources they provide to students throughout the year. Districts build more than the 1,200 minutes needed to meet the lab requirement into the school schedule. Teachers offer make-up lab times to students during study halls, lunch, and after school. The reasons students do not meet the lab requirement are predominantly out of the control of the educator.

SED is instituting a policy change at the eleventh hour that contradicts its own guidance. This places science teachers in New York State at an unfair disadvantage. There is a readily available solution: students who do not qualify to sit for a Regents exam because they did not meet the minimum number of required lab hours should not count in a teacher’s SLO HEDI results. To say that teachers are dismayed by this latest reversal in policy would be a gross understatement. This takes the already strained relationship between SED and practitioners to a new level. Our intent with this letter is, once again, to urge SED to exercise common sense. NYSUT urges SED to re-evaluate this latest change and make it consistent with all previous guidance.

Sincerely,

Maria Neira

c: Members of the Board of Regents

Ken Slentz, Deputy Commissioner


24 May 2013

23 May 2013

How the Many Worlds Interpretation of Quantum Mechanics Almost Cost Me My Job

End of the Year

Time permitting, I like to end the academic year with Special Relativity, with an emphasis on how it allows time travel. We then watch the NOVA episode "Time Travel" with Kip Thorne. One segment uses the Many Worlds (MW) interpretation of Quantum Mechanics to suggest that the single-photon double-slit experiment shows that time travel is possible between parallel universes.

DAVID DEUTSCH: The photon that we can't see is a photon in a parallel universe which - a nearby parallel universe which is interacting with the photon in our universe and causing it to change its direction. The result of these single-photon interference experiments is the strangest thing I know. It is conclusive evidence that reality does not consist of just a single universe, because that result could not come about unless there were another nearby universe interacting with ours.

NARRATOR: If true, this idea has profound implications for time travel.

DAVID DEUTSCH: When one travels back in time one does not in general reach the same universe that one starts from. One reaches the past of a different universe.

To illustrate MW (that when an action has different possible outcomes, the universe splits so that each possible outcome happens in a different universe), I grab a ball from my desk, wind up, and "throw" it at a student (I actually drop it behind my back). There is a collective gasp and then relief that no ball was thrown. I then tell them that according to MW, there is a universe where I did throw the ball. We all giggle a little and move on.

This year, in my last class, the ball didn't drop behind me. Fortunately, I narrowly missed a student. After a moment of shock and then profuse apologies, I got back on track and said, "In another universe, I didn't miss. (pause) And I am now being led away in handcuffs. (pause) And because I can't grade your finals, you all get 100s."

Next year, I'll use a foam ball.

18 May 2013

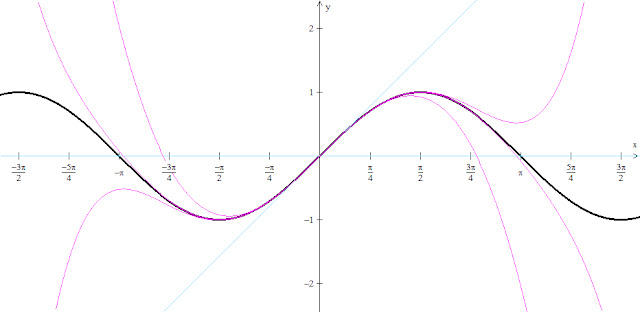

Not Taylor, but CORDIC

There are certain "Wow" moments in the education of a mathematics student. For me, they include Cantor's discovery of different levels of infinity, Gödel's Incompleteness Theorem, and the Lanchester War Model. But on most students' lists will be the Taylor Series.

Geometry and Arithmetic

To see that a geometric quantity, the sine of an angle in a right triangle (the ratio of two lengths), can be calculated with an arithmetic formula is astounding. And that this formula can be implemented with only a few lines of computer code boggles the mind. It is then tempting to assume that this is how your calculator does its calculations. And it probably would be, except for one fact: your calculator is dumb.

Addition is Easy, Multiplication is Hard

Since a calculator at its core uses binary arithmetic, it can easily do addition. You probably learned how to do it in grade school. But multiplication is harder, with one exception: it is easy to multiply by 2 (10 in binary). Let's look at a simple example, 5 × 2 = 10. In binary, it is 101 × 10 = 1010. To multiply a binary number by 2, all you need to do is shift the digits one place to the left, and shifting bits is very easy for a calculator's CPU to do.

The problem with using the Taylor series is that calculating just the first 5 terms of the sine expansion, computing each power and factorial from scratch, requires dozens of multiplications and divisions. However, there are other ways of calculating the sine of an angle that, while harder for humans to do, are easier for computers.
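To make that cost concrete, here is a minimal Python sketch (my own illustration, not how any particular calculator works) that sums the first five terms of the sine series the naive way, rebuilding every power and factorial from scratch, and tallies the multiplications and divisions it performs. The function name `sine_taylor_naive` and the counting scheme are assumptions made for this example. The last lines show the one cheap case: doubling is a left shift.

```python
import math

def sine_taylor_naive(x, n_terms=5):
    """Approximate sin(x) with the first n_terms of
    x - x^3/3! + x^5/5! - ..., recomputing each power and factorial
    from scratch. Returns (approximation, number of multiplications
    and divisions used). Sign flips are not counted."""
    total = 0.0
    ops = 0
    for k in range(n_terms):
        n = 2 * k + 1                  # exponents 1, 3, 5, 7, 9, ...
        power = x
        for _ in range(n - 1):         # x^n by repeated multiplication
            power *= x
            ops += 1
        fact = 1
        for i in range(2, n + 1):      # n! by repeated multiplication
            fact *= i
            ops += 1
        term = power / fact            # one division per term
        ops += 1
        total += term if k % 2 == 0 else -term
    return total, ops

approx, ops = sine_taylor_naive(0.5)
print(f"sin(0.5) ~ {approx:.12f} (math.sin: {math.sin(0.5):.12f}), {ops} mult/div")

# The one cheap multiplication: doubling is a one-place left shift.
print(bin(5), "<< 1 ->", bin(5 << 1))   # prints: 0b101 << 1 -> 0b1010
```

The exact tally depends on what you count, and reusing the previous term cuts it down, but either way the series needs genuine multiplications and divisions rather than simple shifts.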

COordinate Rotation DIgital Computer

An alternate method of calculating sine (and other transcendental functions), developed in the late 1950s, uses only additions, subtractions, and multiplications and divisions by 2. The details are beyond the scope of this post but can be found using the links below. At its heart is the decomposition of the angle into the sum of a set of specific angles (angles whose tangents are 1, 1/2, 1/4, 1/8, ...).

Past threads on Graph-TI asked about the internal methods used to compute trigonometric and other transcendental functions. We wanted to add some specific information to this dialog so that someone could perhaps develop a short module for the classroom if they would like. This topic should be interesting for background on calculators or as a good example of a math application.

Most practical algorithms in use for transcendental functions are either polynomial approximations or the CORDIC method. TI calculators have almost always used CORDIC, the exceptions being the CC-40, TI-74 and TI-95, which used polynomial approximations. In the PC world, the popular Intel math coprocessors like the 8087 use CORDIC methods, while the Cyrix 83D87 uses polynomial methods. There are pros and cons to both methods.
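The angle decomposition mentioned at the start of this section can be sketched in a few lines. Below is a minimal rotation-mode CORDIC in Python, written for illustration rather than taken from TI's or HP's firmware: the 30-bit fixed-point scale, the 30 iterations, and the names `cordic_sin_cos`, `ATAN_TABLE`, and `K_FIXED` are all my assumptions. The constants are computed once with floating point (a calculator would store them in ROM); inside the loop there are only additions, subtractions, right shifts (divisions by powers of 2), and table lookups.

```python
import math

FRAC_BITS = 30                  # fixed point: a value v is stored as round(v * 2**30)
ONE = 1 << FRAC_BITS
N_ITER = 30

# Precomputed angles atan(2**-i), in fixed point.
ATAN_TABLE = [round(math.atan(2.0 ** -i) * ONE) for i in range(N_ITER)]

# Each pseudo-rotation stretches the vector by sqrt(1 + 2**(-2i)).
# Starting x at the inverse of the total stretch means no rescaling at the end.
K = 1.0
for i in range(N_ITER):
    K /= math.sqrt(1.0 + 2.0 ** (-2 * i))
K_FIXED = round(K * ONE)

def cordic_sin_cos(theta):
    """Return (sin(theta), cos(theta)) for |theta| <= pi/2 by rotating the
    vector (K, 0) through +/- atan(2**-i) until the leftover angle is ~0."""
    if abs(theta) > math.pi / 2:
        raise ValueError("this sketch only handles |theta| <= pi/2")
    x, y = K_FIXED, 0
    z = round(theta * ONE)      # angle still left to rotate through
    for i in range(N_ITER):
        if z >= 0:              # rotate counterclockwise by atan(2**-i)
            x, y = x - (y >> i), y + (x >> i)
            z -= ATAN_TABLE[i]
        else:                   # rotate clockwise by atan(2**-i)
            x, y = x + (y >> i), y - (x >> i)
            z += ATAN_TABLE[i]
    return y / ONE, x / ONE

s, c = cordic_sin_cos(0.5)
print(s, math.sin(0.5))
print(c, math.cos(0.5))
```

Angles outside ±π/2 would first be folded into that range with symmetry identities, which this sketch leaves out.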

Details of CORDIC can be found at various sites; a few are listed below.

Links

A paper on the CORDIC algorithm for the general student (from the site that hosts WinPlot, which was used to generate the opening graphic):

http://math.exeter.edu/rparris/documents.html

http://math.exeter.edu/rparris/winplot.html

Sadly, Mr. Parris passed away a few years ago. The school recently deactivated his account. The paper can now be found here.

A paper from the Texas Instruments Calculator division about how their calculators use CORDIC

ftp://ftp.ti.com/pub/graph-ti/calc-apps/info/cordic.txt

How CORDIC was implemented on the HP-35 with details on the bit-shifting operation

http://www.jacques-laporte.org/Trigonometry.htm

The ubiquitous Wikipedia entry

http://en.wikipedia.org/wiki/CORDIC

12 May 2013

A Kvetch

The Technology Treadmill

And so another major change is coming my way. For years, we have been using Moodle as our course management system (CMS). Most teachers in our school just used it as a way for students to get materials they missed in class, while some more advanced teachers also used it to collect and grade some assessments.

A handful of us used Moodle to administer (and grade) multiple choice quizzes and tests. It allowed the randomization not only of the answers within each question, but also of the question order for each student. Administration encouraged that use because it would reduce the use and purchase of the "bubble sheets" we use (at about a nickel each).
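Moodle does this shuffling internally. Purely as an illustration of the idea (not Moodle's code), here is a Python sketch that gives each student a reproducible shuffle of both question order and answer order by seeding a random generator with a student identifier. The question format (dicts with `prompt`, `choices`, `answer`), the function name `personalized_quiz`, and the sample ID are hypothetical.

```python
import random

def personalized_quiz(questions, student_id):
    """Hypothetical helper: return one student's version of a multiple-choice
    quiz, with the question order and each question's answer order shuffled.
    Seeding with student_id means the same student always gets the same
    version, which helps when regrading."""
    rng = random.Random(student_id)
    version = []
    for q in questions:
        choices = list(q["choices"])     # copy so the master quiz is untouched
        rng.shuffle(choices)
        version.append({"prompt": q["prompt"], "choices": choices, "answer": q["answer"]})
    rng.shuffle(version)                 # shuffle the question order last
    return version

master = [
    {"prompt": "Unit of force?", "choices": ["newton", "joule", "watt", "pascal"], "answer": "newton"},
    {"prompt": "Unit of power?", "choices": ["watt", "newton", "volt", "ohm"], "answer": "watt"},
]
print(personalized_quiz(master, student_id=1017))
```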

Knowing that I would have such questions available for years to come, I willingly spent 5 to 15 minutes crafting each appropriate multiple choice question (see a previous blog post on that). Some of those questions had graphics in the question text and also in the answer choices. I also spent hours figuring out the Moodle way to include a semi-random number generator in my question text and how to craft appropriate formulae in the answer text, so that each student would see the same question, but with different values. For example, I could ask a typical optics question of calculating the image distance given a focal length and an object distance, where the givens are random multiples of 10 or 20. I now have a growing collection of MC questions appropriate for my classes.
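Moodle's calculated questions use their own syntax, which this sketch does not attempt to reproduce. As a language-neutral illustration of the same idea, here is a Python sketch of a randomized thin-lens question in which the givens are random multiples of 10 and 20 and the answer comes from the thin-lens equation 1/f = 1/d_o + 1/d_i. The function name, the value ranges, and the use of centimeters are assumptions for this example.

```python
import random

def make_lens_question(rng):
    """Hypothetical generator for one randomized thin-lens question.
    Focal length is a random multiple of 10 cm, object distance a random
    multiple of 20 cm. Returns (question text, f, d_o, d_i)."""
    while True:
        f = 10 * rng.randint(1, 5)        # 10, 20, ..., 50 cm
        d_o = 20 * rng.randint(1, 8)      # 20, 40, ..., 160 cm
        if d_o != f:                      # object at the focal point: no finite image
            break
    # Thin-lens equation 1/f = 1/d_o + 1/d_i, solved for d_i.
    # A negative d_i means a virtual image (object inside the focal length).
    d_i = f * d_o / (d_o - f)
    text = (f"A converging lens has a focal length of {f} cm. An object is "
            f"placed {d_o} cm from the lens. Find the image distance.")
    return text, f, d_o, d_i

rng = random.Random()                     # unseeded: new values each attempt
text, f, d_o, d_i = make_lens_question(rng)
print(text)
print(f"Answer: {d_i:.1f} cm")
```

Returning the givens along with the answer makes it easy to check a student's response or to show a worked solution afterwards.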

Just recently, administration has let it be known through the grapevine that we will be switching CMSs to Schoology. No teacher input. While it is claimed that the switch-over will be gradual (over the next academic year) and painless (supposedly we can just copy our material from Moodle directly into the new system), veteran teachers know that such major changes rarely go as smoothly as claimed.

I have had a brief chance to try the new CMS, Schoology. My first impression is that Schoology is designed to be easy for the 95% of teachers who use only the basic features. And that is where they are spending most of their development time. That makes sense for a commercial product.

However, our old CMS (Moodle) is open-source and community-supported. There is a large number of outside-developed modules that vastly extend the basic product. For example, one person decided that it would be nice to be able to insert GeoGebra interactive graphics easily. So that person spent some time, wrote a module to do so, and then offered it to the general public on Moodle. Schoology, by contrast, is closed-source. So any new feature has to be begged for, and the company has to decide whether it makes economic sense to work on that feature.

It is like the difference between Linux and Microsoft Windows. Windows is easier to use; however, Linux allows you to do what you want if you are willing to spend some time modifying things. Windows is nice for the 95%, but Linux is better for ultra-power-users. And Linux users like to show off and share. Moodle is like Linux. Schoology is like Windows (or, even worse, Apple).

For example, there is a very powerful feature in Moodle that allows a teacher to make a quiz with questions using random numbers or numbers taken from a dataset. Here is an example: "How far does a car go when it has a speed of 'x' m/s and travels for 'y' minutes?" I can choose to let 'x' and 'y' be completely random numbers, random numbers in a set range, or numbers chosen randomly from a list I specify. Obviously, this feature is of interest almost exclusively to teachers of the mathematical sciences. It took me a while to master the syntax needed for these questions, but I found the feature a helpful one. I could tailor my online quizzes according to my educational philosophy on such assessments. Several people have asked for such a feature on Schoology for over a year, but there has been no response from the company. So, for me, a critical feature is no longer available.
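The feature described here can be illustrated outside Moodle. The Python sketch below (not Moodle's calculated-question syntax) generates the car question with x either drawn from a specified list or picked at random in a set range, and computes the matching answer. Note the unit conversion the question hides: y is in minutes, so the distance is x · y · 60 meters. The function name and the value ranges are assumptions.

```python
import random

def car_distance_question(rng, speed_list=None):
    """Hypothetical generator for the car question in the post.
    x is the speed in m/s, y the time in minutes. If speed_list is given,
    x is drawn from it (like a dataset); otherwise x is a random integer
    in a fixed range. The answer is x m/s * (y * 60) s, in meters."""
    if speed_list:
        x = rng.choice(speed_list)
    else:
        x = rng.randint(5, 40)            # assumed range, m/s
    y = rng.randint(1, 10)                # assumed range, minutes
    text = (f"How far does a car go when it has a speed of {x} m/s "
            f"and travels for {y} minutes?")
    answer_m = x * y * 60                 # convert minutes to seconds first
    return text, x, y, answer_m

rng = random.Random()
print(car_distance_question(rng))
print(car_distance_question(rng, speed_list=[12, 15, 25]))
```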

Since it seems that the switch-over is going to happen, I tried the new site. Trouble. Quizzes transferred over with graphics only in the question text; there were no graphics in the answer choices. I also have several practice quizzes that were made as websites. As such, the files for question pages and answer choices have to be in a specific folder structure for the links to work. All the quizzes and all their supporting files were dumped into one folder. The links are borked. So I will have to waste several hours recreating content instead of enhancing it. I haven't even looked at the questions where the answers are generated from a random value given in the question and an appropriate formula in the answer text.

I don't have high hopes that these problems will be fixed when we officially switch over. I am the only one at my school using these advanced features of our current CMS. I am sure the people at Schoology will look at the problem and then shrug their shoulders. It will be left to me to sort things out. So, instead of writing new questions that try to get students to think before reaching for a calculator, I will have to rewrite the old ones and figure out the Schoology way of doing things.

There is one concession to my plight. Through a grant, my school is hosting a weeklong summer work session for a core of teachers to pilot this new CMS. We will get together and try things in a workshop setting, and we will get a stipend. So at least I will get some monetary compensation for my pain. I hope it works out to at least one-tenth of minimum wage for all the time I will have to spend implementing the changeover.

Addendum

The new CMS company does have a support site. I looked there to see if other users had some of the same concerns as I. Some do; however, it has been over a year since the first request was made for the use of random numbers in quiz questions. Nothing yet. I have no hopes for the future.
